Facial recognition systems can be fooled into thinking that a person is someone else when that person wears a pair of glasses made on a printer.

The Daily Mail reported that researchers at Carnegie Mellon University in the US who were studying facial recognition programmes found a way to fool the system, and even make it think that the wearer was somebody else, by disrupting the system's "ability to read pixel colouration". Although these systems can recognise a person in a crowd, the glasses showed how attackers could override them using technology that cost just 22 cents (€0.19) to print.


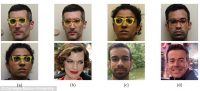

During the research, the glasses allowed a man to impersonate actress Milla Jovovich and a "South Asian female to impersonate a Middle Eastern man" with 90 percent accuracy. Because the system uses neural networks to identify a face, it can be fooled by changes in pixel colouration. The researchers, who were investigating security attacks, found that the glasses worked against "a number of systems including white-box and black-box face recognition", and could also be used to avoid "face detection" altogether.

The glasses were made using an Epson XP-830 inkjet printer. The researchers "developed an algorithm to reproduce the colours needed for impersonation" and to guarantee that the frames were "smooth and effective for misclassifying". The frames started out as a solid yellow colour and were digitally placed onto images of the person attempting the impersonation. The colour of the glasses was then "updated using the Gradient Descent algorithm and then attached to a pair of normal glasses".
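The colour-update step can be illustrated with a toy sketch. It is not the researchers' code: it assumes a hypothetical linear softmax classifier standing in for the neural network, a hypothetical rectangular mask marking where the frames sit, and random data in place of a real face photo, and it leaves out the smoothness and printable-colour constraints mentioned above. Starting from solid yellow frames, it runs gradient descent on the loss −log p(target), changing only the frame pixels, so that the model comes to classify the image as a different identity.

```python
import numpy as np

# Hypothetical stand-in for a face recognition network: a linear softmax
# classifier over the flattened RGB pixels of a tiny image.
rng = np.random.default_rng(0)
H, W, C, K = 8, 8, 3, 4                  # image height, width, channels, identities
weights = rng.normal(size=(K, H * W * C))


def class_probs(image):
    """Return the softmax probability of each identity for an HxWxC image."""
    logits = weights @ image.ravel()
    logits -= logits.max()               # subtract the max for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()


# Hypothetical "eyeglass frame" mask: 1 where the attacker may change pixels.
mask = np.zeros((H, W, C))
mask[2:4, 1:7, :] = 1.0

face = rng.uniform(size=(H, W, C))       # random stand-in for the wearer's photo
yellow = np.array([1.0, 1.0, 0.0])       # initial solid-yellow frame colour
image = face * (1 - mask) + yellow * mask

original_id = int(class_probs(image).argmax())
target = (original_id + 1) % K           # any identity other than the current one

step = 0.01
for _ in range(500):
    p = class_probs(image)
    # Loss is -log p[target]; for a linear softmax its gradient with respect
    # to the flattened image is sum_k p_k * w_k - w_target.
    grad = (p @ weights - weights[target]).reshape(H, W, C)
    # Gradient descent step applied only to frame pixels, then clip so the
    # colours stay in the [0, 1] range.
    image = np.clip(image - step * grad * mask, 0.0, 1.0)

print("before:", original_id, "after:", int(class_probs(image).argmax()))
```

Against a real deep network the gradient would come from backpropagation rather than the closed-form expression used here, but the idea is the same: only the pixels covered by the frames change, while the rest of the face is left untouched.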

During the tests, all three people who wore the glasses were able to fool the system. The researchers also "demonstrated this type of attack on a commercial facial recognition system in which access is far more limited", reporting that the attack had a "90 percent success rate with the Face++ software".

The researchers believe their findings show the risks associated with "machine learning technology" and the need to secure these systems further. The research paper states: "As our reliance on technology increases, we sometimes forget that it can fail. In some cases, failures may be devastating and risk lives. Our work and previous work show that the introduction of ML [machine learning] to systems, while bringing benefits, increases the attack surface of these systems.

“Therefore, we should advocate for the integration of only those ML algorithms that are robust against evasion.”